Constrained Decoding for Robot Foundation Models

How lessons from language models inspired a new way to make robot foundation models provably safe.

Key Question: How can we enforce contextual user rules such as “don’t approach the bedroom if you have food in hand” for robot foundation models, even when the models weren’t explicitly trained for them?

Our Approach: A constrained decoding method for robot foundation models that enforces contextual safety rules at inference time without retraining.

Recent Robot Foundation Models (RFMs) such as SPOC, FLaRe, PoliFormer, and OpenVLA map multimodal inputs (RGB, language, proprioception) directly to action sequences and generalize well across navigation and manipulation tasks. Trained on large language-conditioned trajectory datasets, they achieve strong zero-shot transfer on diverse goals such as object-centric tasks ("find a mug"), spatial tasks ("visit all rooms"), and attribute-conditioned variants ("locate the chair closest to the refrigerator"). While these models demonstrate robust real-world performance, they remain purely data-driven and have no explicit notion of safety. For instance, they cannot reliably enforce contextual constraints such as “avoid entering the bedroom while carrying food.” Deploying these policies in the wild requires more than relying on the implicit biases of the pretraining data.

One way to enforce such rules is to fine-tune a pretrained model on safe demonstrations. However, retraining is expensive, and because the resulting policy is still stochastic, it cannot provide provable safety. We therefore need an inference-time approach to enforcing safety requirements.

Background on structured outputs for LLMs

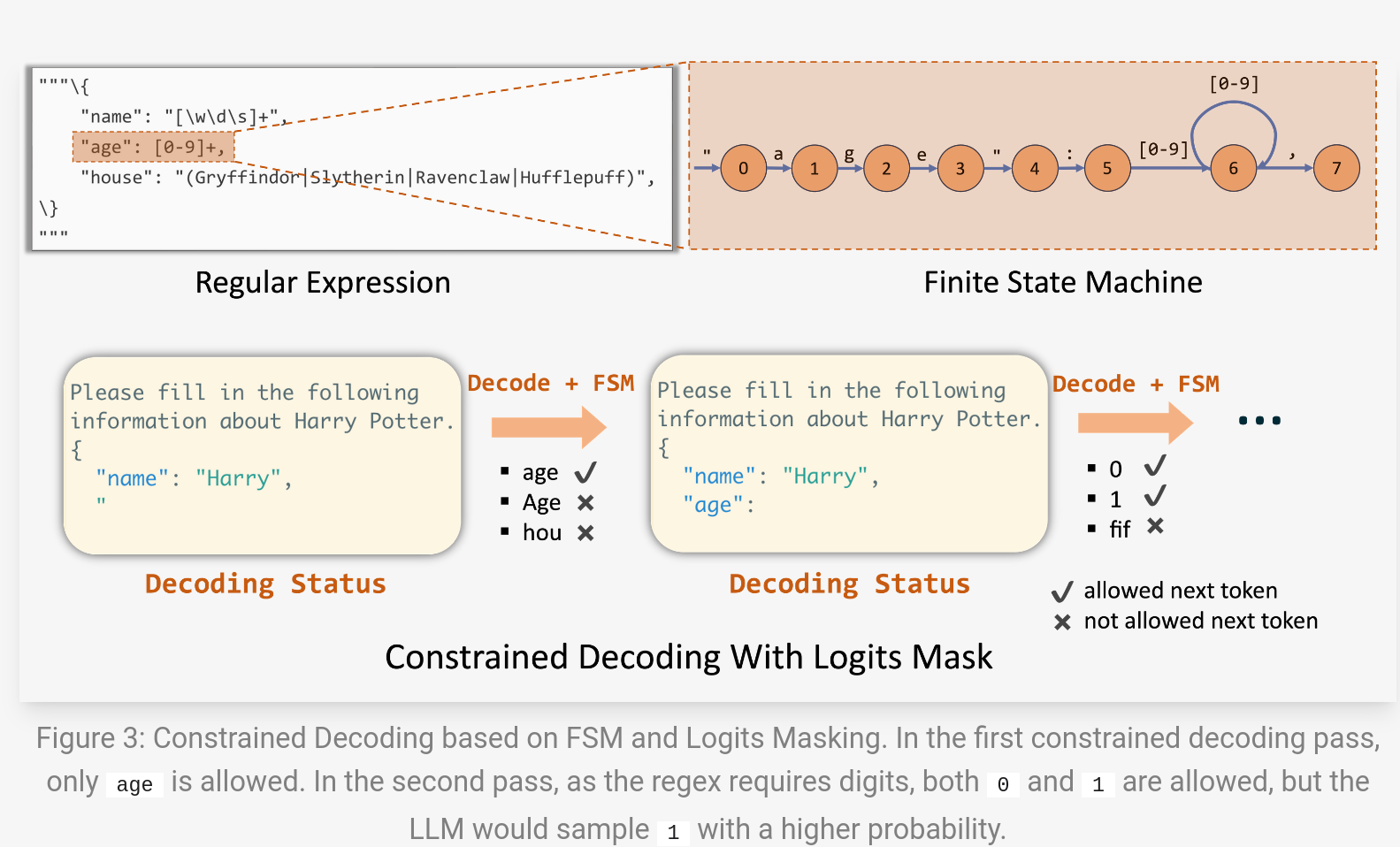

For large language models (LLMs), syntactic correctness, such as conformance to a JSON schema, can be enforced at inference time without fine-tuning: constrained decoding ensures that generated sequences satisfy syntactic or structural rules. Early beam-search-based approaches pruned tokens that violate constraints [1], while recent frameworks enable grammar- and program-aligned outputs via lightweight runtime control [2], [3]. Follow-on work extends this to regex schemas and context-free grammars [4], [5].

In all these methods, the core principle is the same: mask invalid next-tokens before they’re produced, pruning probability mass of illegal continuations so the model outputs well-formed text (e.g., JSON) [2], [4].
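The masking step described above can be sketched in a few lines. This is a toy illustration only: the four-token vocabulary and the `allowed` mask are made-up assumptions standing in for what a real grammar or schema engine would compute at each step.

```python
import math

def masked_softmax(logits, allowed):
    """Softmax in which disallowed tokens are forced to zero probability."""
    # Invalid continuations get a logit of -inf before normalization.
    masked = [l if ok else -math.inf for l, ok in zip(logits, allowed)]
    m = max(masked)
    if m == -math.inf:
        raise ValueError("no valid continuation")
    exps = [math.exp(l - m) for l in masked]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and raw model scores.
vocab = ["{", "}", '"', "hello"]
logits = [1.0, 0.5, 2.0, 3.0]
# Assume we are just after an opening brace, so only '}' or '"' is legal.
allowed = [False, True, True, False]

probs = masked_softmax(logits, allowed)
```

Note that the highest-scoring token (`hello`) ends up with zero probability, and the remaining mass is renormalized over the legal tokens.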

Key insight: If we can prune invalid completions in LLMs, could we prune unsafe action sequences for RFMs? Since these RFMs are largely autoregressive transformers, can we apply similar ideas from constrained decoding?

The catch: A large class of robotics constraints are temporal and defined over state trajectories (e.g., never pass through an unsafe region). Moreover, most current RFMs output action tokens rather than states. Thus, unlike in LLMs, constraint checking cannot happen purely in token space; it requires forward simulation (a dynamics stepping function) to evaluate specification satisfaction as actions unfold [6].
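To see why token-space checking is not enough, consider the minimal sketch below. The action names, step sizes, and rectangular zone are hypothetical; the point is only that the same action token is safe or unsafe depending on the state it is applied in.

```python
import math

def unicycle_step(state, action, dist=0.25, turn=math.radians(30)):
    """Approximate unicycle-style dynamics; state = (x, y, heading)."""
    x, y, th = state
    if action == "move_ahead":
        return (x + dist * math.cos(th), y + dist * math.sin(th), th)
    if action == "rotate_left":
        return (x, y, th + turn)
    if action == "rotate_right":
        return (x, y, th - turn)
    raise ValueError(f"unknown action: {action}")

def in_forbidden_zone(state, zone):
    """zone = (xmin, ymin, xmax, ymax), an assumed axis-aligned rectangle."""
    x, y, _ = state
    xmin, ymin, xmax, ymax = zone
    return xmin <= x <= xmax and ymin <= y <= ymax

zone = (1.0, -0.5, 2.0, 0.5)
facing_zone = (0.8, 0.0, 0.0)      # heading toward +x, i.e. toward the zone
facing_away = (0.8, 0.0, math.pi)  # same position, heading toward -x

# The same token, applied in two different states.
unsafe = in_forbidden_zone(unicycle_step(facing_zone, "move_ahead"), zone)
safe = not in_forbidden_zone(unicycle_step(facing_away, "move_ahead"), zone)
```

A token-level mask has no way to distinguish these two cases; a dynamics step does.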

Constrained decoding for RFMs

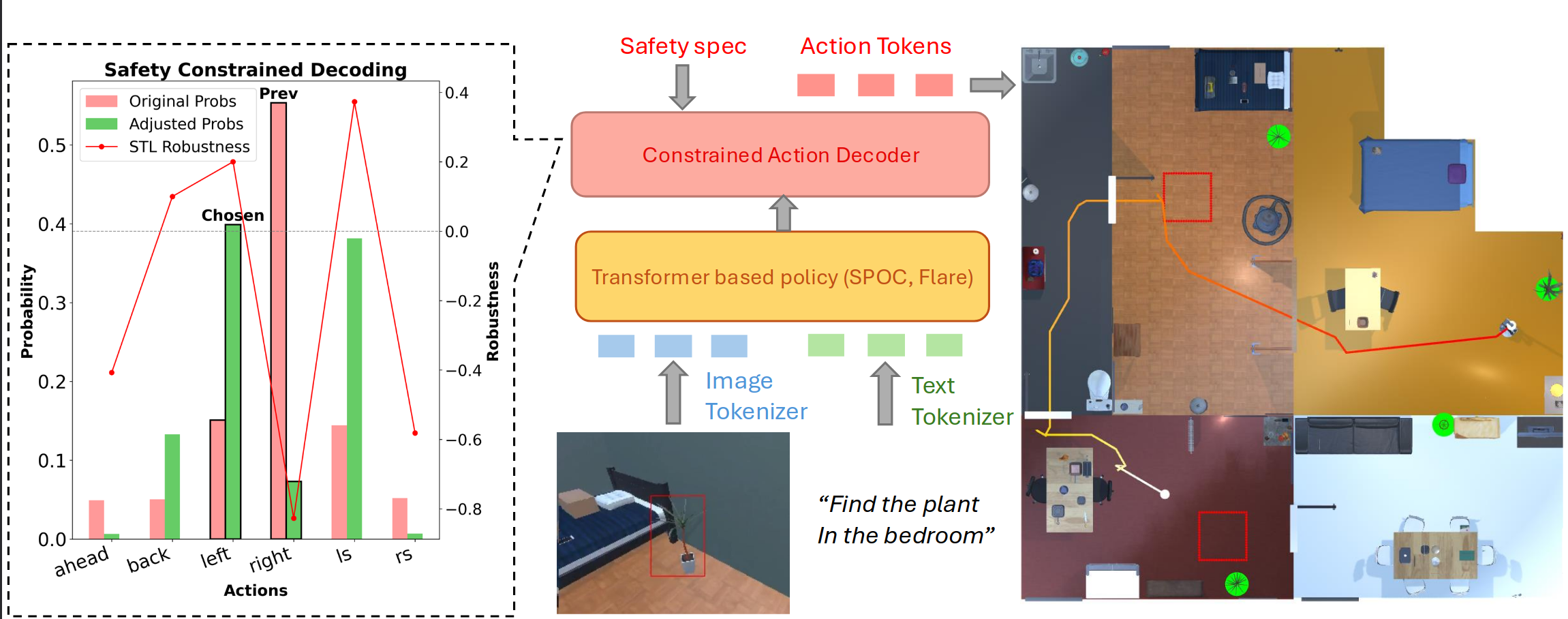

We take the constrained-decoding principle and apply it to enforce safety specifications over state trajectories. Our safety rules are expressed in Signal Temporal Logic (STL), a formal language defined over continuous signals from dynamical systems. We propose safety specification aligned decoding (SafeDec), which simulates candidate actions with an approximate dynamics model and evaluates STL satisfaction in real time, directly inside the decoding loop [6]. Given an STL rule \( \varphi \) and a lightweight dynamics model \( f(x_t, a_t) \), SafeDec forecasts the outcome of each candidate next action and then either masks the action if it leads to a violation (HCD) or reweights it by its satisfaction score to steer toward safer behavior (RCD) [7].
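For readers new to STL, the standard quantitative (robustness) semantics can be summarized as follows. This is textbook STL rather than anything specific to SafeDec; the predicate function \( h \) and the circular-zone example are illustrative assumptions.

\[
\begin{aligned}
\rho(h(x) \ge 0,\; x, t) &= h(x_t) \\
\rho(\neg \varphi,\; x, t) &= -\rho(\varphi, x, t) \\
\rho(\varphi_1 \wedge \varphi_2,\; x, t) &= \min\big(\rho(\varphi_1, x, t),\ \rho(\varphi_2, x, t)\big) \\
\rho(\mathbf{G}\,\varphi,\; x, t) &= \min_{t' \ge t} \rho(\varphi, x, t')
\end{aligned}
\]

A positive \( \rho \) means the trajectory satisfies the rule, and a negative value means it violates it. For example, an "always avoid" rule for a hypothetical circular zone with center \( c \) and radius \( r \) could use \( h(x_t) = \lVert p_t - c \rVert - r \), where \( p_t \) is the robot position, so that \( \rho \) is the smallest clearance along the trajectory.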

SafeDec sits between the policy’s final logits and action selection. Given an STL spec φ and a lightweight dynamics model, it either masks or reweights the action logits at each step so that the sampled action respects the safety specification both now and in the near future.

Hard Constrained Decoding (HCD)

If a candidate action’s predicted next state would violate φ, set its logit to −∞ (i.e., zero probability) before softmax. This yields provable compliance under the assumed dynamics.
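A minimal sketch of HCD with one-step lookahead is shown below. The discrete action set, the unicycle-style `step` function, and the rectangular forbidden zone are all assumptions made for illustration; they are not taken from the SafeDec implementation.

```python
import math

ACTIONS = ["move_ahead", "rotate_left", "rotate_right"]  # hypothetical tokens

def step(state, action, dist=0.25, turn=math.radians(30)):
    """Approximate dynamics f(x_t, a_t); state = (x, y, heading)."""
    x, y, th = state
    if action == "move_ahead":
        return (x + dist * math.cos(th), y + dist * math.sin(th), th)
    if action == "rotate_left":
        return (x, y, th + turn)
    return (x, y, th - turn)  # rotate_right

def violates(state, zone):
    """True if the state lies inside the forbidden rectangle."""
    x, y, _ = state
    xmin, ymin, xmax, ymax = zone
    return xmin <= x <= xmax and ymin <= y <= ymax

def hcd_mask(logits, state, zone):
    """Set the logit of every action whose predicted next state violates the spec to -inf."""
    return [-math.inf if violates(step(state, a), zone) else l
            for a, l in zip(ACTIONS, logits)]

def softmax(logits):
    m = max(logits)
    if m == -math.inf:
        raise ValueError("every action is masked; a fallback is needed")
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

state = (0.8, 0.0, 0.0)            # just outside the zone, facing it
zone = (1.0, -0.5, 2.0, 0.5)
policy_logits = [2.0, 0.1, 0.1]    # the policy prefers move_ahead
probs = softmax(hcd_mask(policy_logits, state, zone))
```

Here the policy's preferred action is removed entirely and the two rotations split the probability mass. The guarantee holds only with respect to `step`; if the true dynamics differ, so may the outcome.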

Robustness Constrained Decoding (RCD)

Compute the STL robustness score ρ (a quantitative measure of satisfaction) for each candidate’s predicted successor state and convert it to a weight that shifts the logits, boosting safer actions and suppressing risky ones with tunable strength β. This preserves task performance while greatly reducing violations.
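One plausible way to realize this reweighting is an additive shift of `beta * rho` on each logit, sketched below. The additive form, the circular zone, and the action set are assumptions for illustration; the exact weighting used by SafeDec may differ.

```python
import math

ACTIONS = ["move_ahead", "rotate_left", "rotate_right"]  # hypothetical tokens

def step(state, action, dist=0.25, turn=math.radians(30)):
    """Approximate dynamics f(x_t, a_t); state = (x, y, heading)."""
    x, y, th = state
    if action == "move_ahead":
        return (x + dist * math.cos(th), y + dist * math.sin(th), th)
    if action == "rotate_left":
        return (x, y, th + turn)
    return (x, y, th - turn)  # rotate_right

def robustness(state, center, radius):
    """Clearance from a circular forbidden zone: positive outside, negative inside."""
    x, y, _ = state
    return math.hypot(x - center[0], y - center[1]) - radius

def rcd_reweight(logits, state, center, radius, beta):
    """Shift each logit by beta times the robustness of its predicted next state."""
    return [l + beta * robustness(step(state, a), center, radius)
            for a, l in zip(ACTIONS, logits)]

state = (0.8, 0.0, 0.0)
center, radius = (1.5, 0.0), 0.5
policy_logits = [2.0, 0.1, 0.1]  # the policy prefers move_ahead

mild = rcd_reweight(policy_logits, state, center, radius, beta=1.0)
strong = rcd_reweight(policy_logits, state, center, radius, beta=10.0)
```

With a small `beta` the policy's preference for `move_ahead` survives; with a large `beta` the rotations overtake it. Unlike masking, no action is ever assigned zero probability, which is why this variant trades the hard guarantee for better task performance.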

SafeDec is model-agnostic: it only needs (1) access to decoder logits and (2) an approximate dynamics function. STL evaluation is done efficiently with STLCG++ for real-time inference.

Sample Visualisations

Results at a Glance

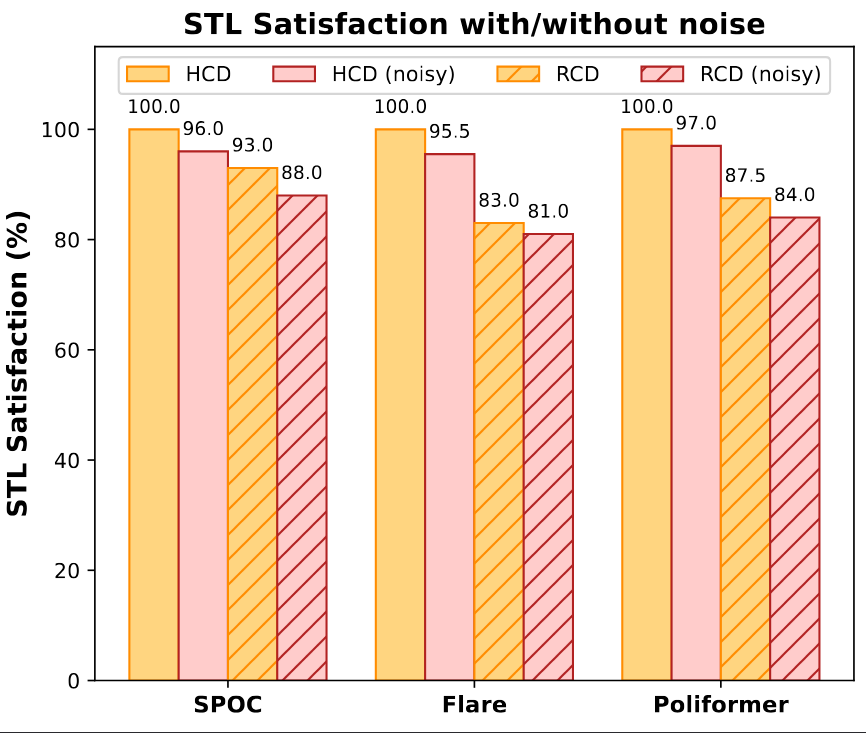

We evaluated SafeDec on hundreds of procedurally generated AI2-THOR scenes with three SOTA policies (SPOC, FLaRe, PoliFormer), enforcing two invariant specs: ϕavoid (never enter forbidden zones) and ϕgeofence (stay within allowed regions).

- Unconstrained: Only ~68–78% geofence and ~72–77% avoid satisfaction.

- HCD: ~100% spec satisfaction across models/specs, with a modest 5–10% success drop vs. baseline.

- RCD: ~80–95% spec satisfaction with success rates close to unconstrained (often within 1–3%); better safety–performance trade-off than HCD.
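For intuition about what "spec satisfaction" measures here, the two invariant specs can be given a simple quantitative form. The sketch below assumes axis-aligned rectangular regions and plain Python rather than STLCG++; the function names and the example trajectory are hypothetical.

```python
def inside_margin(point, box):
    """Signed margin of a point w.r.t. a box (xmin, ymin, xmax, ymax): positive inside."""
    x, y = point
    xmin, ymin, xmax, ymax = box
    return min(x - xmin, xmax - x, y - ymin, ymax - y)

def rho_geofence(traj, allowed):
    """Robustness of 'always stay inside allowed': worst-case margin over the trajectory."""
    return min(inside_margin(p, allowed) for p in traj)

def rho_avoid(traj, forbidden):
    """Robustness of 'always stay outside forbidden': negated margin, worst case over time."""
    return min(-inside_margin(p, forbidden) for p in traj)

traj = [(0.5, 0.5), (1.0, 0.5), (1.5, 0.5)]
allowed = (0.0, 0.0, 2.0, 1.0)
forbidden = (1.25, 0.0, 1.75, 1.0)

geofence_score = rho_geofence(traj, allowed)
avoid_score = rho_avoid(traj, forbidden)
```

On this trajectory the geofence score is positive (the robot stays inside the allowed region) while the avoid score is negative (the last waypoint enters the forbidden box), so the episode would count as satisfying the first spec and violating the second.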

Ablations

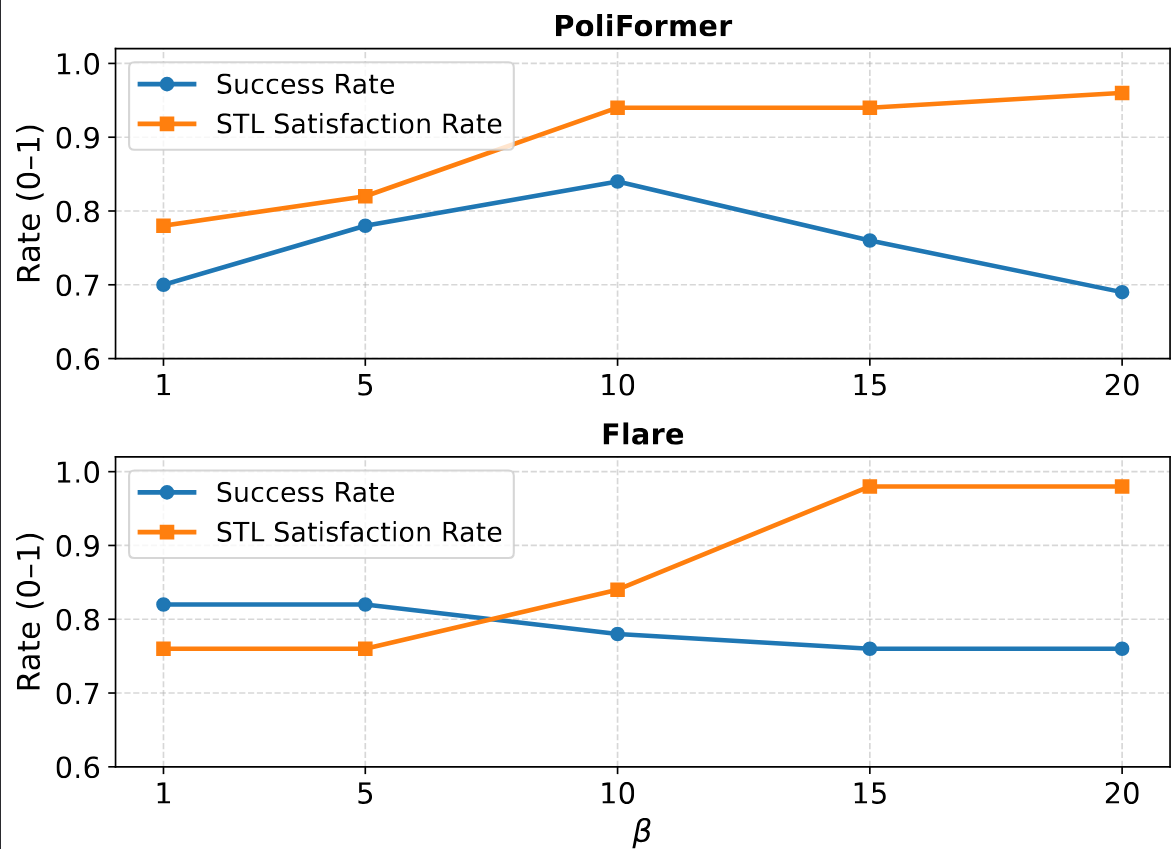

Since we assume a simple dynamics model (unicycle) for generating states from proposed actions, we evaluate the impact of noisy dynamics on final satisfaction. We also sweep over the β parameter for RCD, which controls how strongly specification satisfaction is prioritized over task performance.

SafeDec remains effective under dynamics noise; both HCD and RCD degrade gracefully. In the β ablation, for PoliFormer both STL satisfaction and success rate improve in tandem as β increases up to β = 10, suggesting that moderate regularization can actually aid policy execution. Beyond this point, STL satisfaction continues to improve, but at the cost of lower success rates. For FLaRe, larger β values improve STL satisfaction but reduce success rates. These results show that the influence of β is model-dependent, and that SafeDec provides a tunable mechanism for balancing safety and performance objectives.

Conclusion

In this post, we explored a constrained decoding framework that brings safety guarantees to large transformer-based robot policies. By enforcing safety specifications directly at inference time, our approach enables real-time adaptation to new rules and environments without any retraining.

We’re excited about how this line of work can make foundation models more reliable in the wild. Stay tuned as we share more results, open-source code, and demos soon!

Papers

References

- [1] Hokamp, C., & Liu, Q. (2017). Lexically Constrained Decoding for Sequence Generation (Grid Beam Search). ACL.

- [2] Willard, B., & Louf, R. (2023). Guidance: A Language Model Control Framework. GitHub: guidance-ai/guidance.

- [3] Beurer-Kellner, L., et al. (2023). Prompting is Programming: A Query Language for LLMs. PLDI. DOI: 10.1145/3591300.

- [4] Welleck, S., et al. (2024). From Decoding to Meta-Generation: Inference-Time Algorithms for LLMs. arXiv: 2406.16838.

- [5] Park, K., et al. (2024). Grammar-Aligned Decoding. NeurIPS.

- [6] SafeDec paper (this work): LLM vs RFM constraint contrast; need for dynamics stepping for spec checking (see §2.2 and §3.1).

- [7] SafeDec paper (this work): HCD masking by setting logits to −∞ for spec-violating actions; RCD reweighting by STL robustness (see §3.2–§3.3).

- [8] SafeDec paper (this work): Model-agnostic, inference-time STL enforcement without retraining; overall vision (Intro & §3).